Determining Material Composition using Deep Hyperspectral Image Unmixing

Description

Linear unmixing assumes that the observed spectrum in a pixel is a linear combination of the spectral signatures of the constituent materials. Mathematically, this can be represented as:

$$D = SA + E$$

where $D$ is the observed hyperspectral pixel spectrum, $S$ is a matrix containing the spectral signatures of the materials (endmembers), $A$ is a column vector representing the abundance fractions of each endmember, and $E$ is an error term. On the other hand, non-linear unmixing methods account for the nonlinear mixing effects caused by factors such as illumination conditions, atmospheric effects, and complex interactions between materials. Advanced machine learning techniques, including deep learning models such as convolutional neural networks (CNNs) and transformers, have shown promise in capturing these non-linear relationships.
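As a rough illustration of the linear mixing model, the sketch below simulates one pixel and recovers its abundances with ordinary least squares. The endmember matrix is random and the helper name `estimate_abundances` is hypothetical; neither comes from the project. Practical unmixing methods usually also enforce non-negativity and sum-to-one constraints on the abundances, which this sketch deliberately omits.

```python
import numpy as np

def estimate_abundances(S, D):
    """Unconstrained least-squares estimate of A in D = S A + E.

    S: (n_bands, n_endmembers) endmember matrix, D: (n_bands,) pixel spectrum.
    Hypothetical helper for illustration; no abundance constraints are enforced.
    """
    A_hat, *_ = np.linalg.lstsq(S, D, rcond=None)
    return A_hat

rng = np.random.default_rng(0)
n_bands, n_endmembers = 50, 3

# Assumption: random values stand in for real spectral signatures.
S = rng.uniform(0.0, 1.0, size=(n_bands, n_endmembers))

# Ground-truth abundances: non-negative and summing to one.
A_true = np.array([0.6, 0.3, 0.1])

# Observed spectrum D = S A + E with a small Gaussian error term E.
E = rng.normal(0.0, 1e-3, size=n_bands)
D = S @ A_true + E

A_hat = estimate_abundances(S, D)
print("estimated abundances:", A_hat)
print("sum of abundances:", A_hat.sum())
```

With low noise the estimate lands close to the true fractions, but nothing in the unconstrained solve prevents negative values when the noise is larger or the endmembers are strongly correlated.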

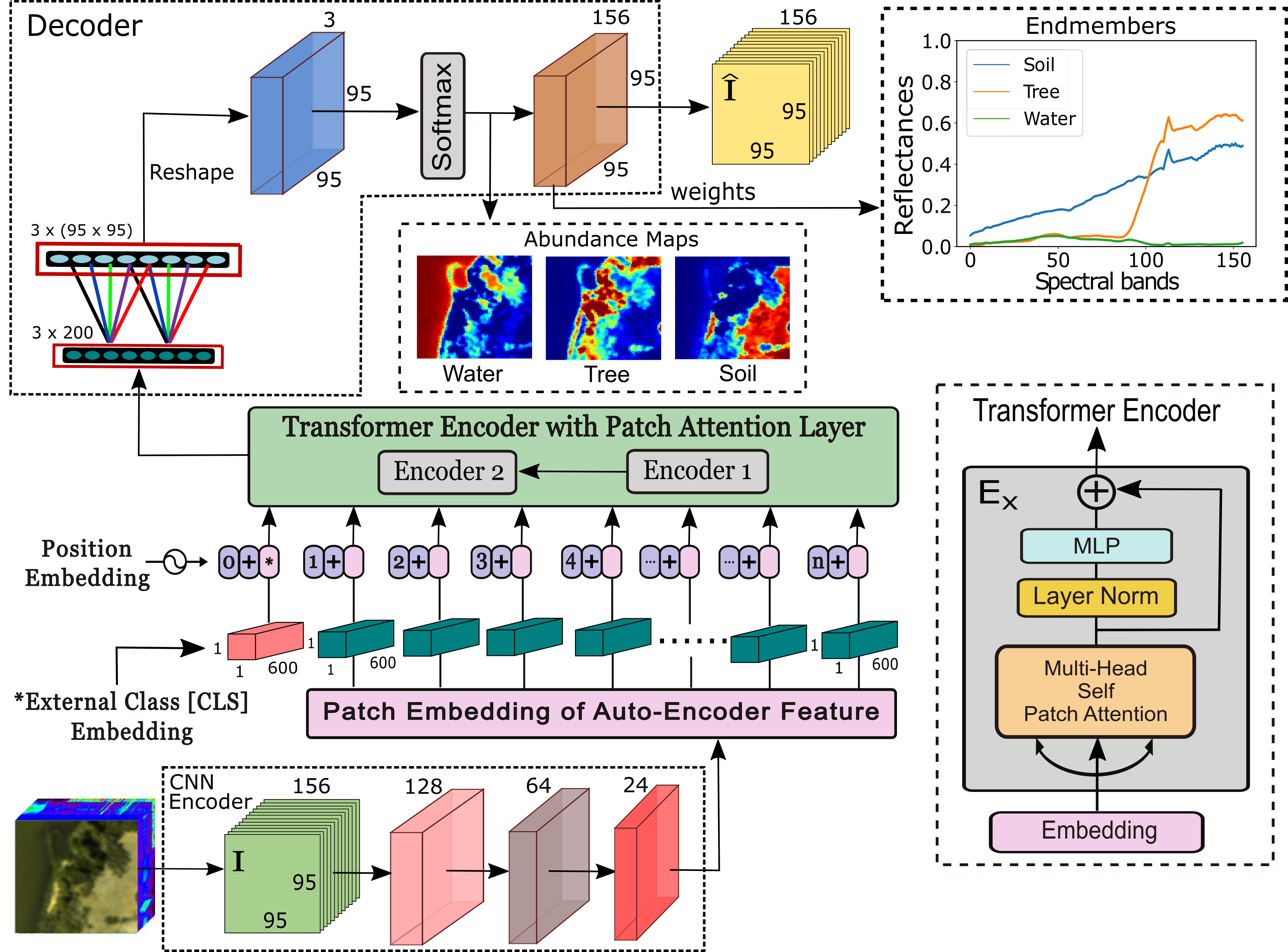

Hyperspectral unmixing decomposes mixed pixel spectra into their constituent endmembers and the corresponding abundance fractions. Traditional methods struggle to capture complex spectral relationships, which motivates exploring advanced techniques such as transformer-based networks. This exploration aims to identify a suitable network architecture for hyperspectral unmixing.

Transformers, which achieve state-of-the-art performance in natural language processing, have attracted the interest of the vision research community. They have since found their way into hyperspectral image classification and achieved promising results. In this work, we apply transformers to hyperspectral unmixing and propose a novel deep neural network-based unmixing model. A transformer network captures non-local feature dependencies through interactions between image patches, a mechanism that CNN models do not employ, and can thereby improve the quality of the extracted endmember spectra and abundance maps.

The proposed model combines a convolutional autoencoder with a transformer. The convolutional encoder encodes the hyperspectral data, the transformer captures long-range dependencies and non-linear relationships between the representations derived from the encoder, and a convolutional decoder reconstructs the data. Transformers excel at sequence modeling tasks, which makes them suitable for learning complex patterns in hyperspectral imagery. We applied the proposed unmixing model to three widely used unmixing datasets (Samson, Apex, and Washington DC Mall) and compared it with state-of-the-art methods in terms of root mean squared error (RMSE) and spectral angle distance (SAD).
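The two evaluation metrics named above can be sketched with their standard definitions: RMSE between true and estimated abundances, and the spectral angle between a reference and an estimated endmember spectrum. The function names and toy values below are assumptions for illustration. How the scores are averaged over pixels or endmembers is not stated here and is left out.

```python
import numpy as np

def rmse(a_true, a_est):
    """Root mean squared error between two abundance arrays of equal shape."""
    a_true = np.asarray(a_true, dtype=float)
    a_est = np.asarray(a_est, dtype=float)
    return float(np.sqrt(np.mean((a_true - a_est) ** 2)))

def spectral_angle_distance(s_true, s_est):
    """Angle in radians between two spectra given as 1-D arrays over bands."""
    s_true = np.asarray(s_true, dtype=float)
    s_est = np.asarray(s_est, dtype=float)
    cos = np.dot(s_true, s_est) / (np.linalg.norm(s_true) * np.linalg.norm(s_est))
    # Clip to guard against rounding that pushes the cosine outside [-1, 1].
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

# Toy values, invented purely for illustration.
abund_true = np.array([0.6, 0.3, 0.1])
abund_est = np.array([0.5, 0.3, 0.2])
print("RMSE:", rmse(abund_true, abund_est))

spectrum = np.array([0.2, 0.4, 0.6])
print("SAD of a scaled copy:", spectral_angle_distance(spectrum, 2.0 * spectrum))
print("SAD of orthogonal spectra:", spectral_angle_distance([1.0, 0.0], [0.0, 1.0]))
```

Because the spectral angle depends only on the direction of the spectra, a uniformly brighter or darker copy of the same signature scores close to zero, which is why the angle is commonly used to compare endmember shapes.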

Approved Objectives of the Proposal:

- To estimate the composition of each hyperspectral pixel.

- To collect the ground truth spectral signatures of pure materials.

- To explore various transformer-based networks for hyperspectral unmixing and identify a suitable network for the stated problem.

- To produce land use and land cover (LULC) maps from hyperspectral images in an unsupervised manner.
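The objectives do not say how the LULC maps are derived from the unmixing output. One simple possibility, assumed here purely for illustration, is to label each pixel with its most abundant endmember. The function name and the toy abundance cube are hypothetical.

```python
import numpy as np

def abundances_to_label_map(abundances):
    """Label each pixel with the index of its most abundant endmember.

    abundances: array of shape (n_endmembers, height, width).
    Assumption: dominant-endmember labelling; the project may use another rule.
    """
    return np.argmax(np.asarray(abundances), axis=0)

# Toy 2x2 image with 3 endmembers; each pixel's abundances sum to one.
abundances = np.array([
    [[0.7, 0.2], [0.1, 0.3]],
    [[0.2, 0.5], [0.1, 0.3]],
    [[0.1, 0.3], [0.8, 0.4]],
])

labels = abundances_to_label_map(abundances)
print(labels)
# [[0 1]
#  [2 2]]
```

A hard label map of this kind discards the sub-pixel composition that unmixing provides, so it suits coarse mapping better than tasks that need the fractions themselves.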

Details

| Field | Value |
| --- | --- |
| Start date | 14.12.2022 |
| End date | 13.12.2024 |
| Project Cost | 13,87,560 INR |
| Junior Research Fellow | Available |
| Funding Agency | Science and Engineering Research Board, Govt. of India |

Publications

- WetMapFormer: A Unified Deep CNN and Vision Transformer for Complex Wetland Mapping. Ali Jamali, Swalpa Kumar Roy, and Pedram Ghamisi. International Journal of Applied Earth Observation and Geoinformation, vol. 120, p. 103333, 2023.

- TransU-Net++: Rethinking Attention Gated TransU-Net for Deforestation Mapping. Ali Jamali, Swalpa Kumar Roy, Jonathan Li, and Pedram Ghamisi. International Journal of Applied Earth Observation and Geoinformation, vol. 120, p. 103332, 2023.

- Multimodal Fusion Transformer for Remote Sensing Image Classification. Swalpa Kumar Roy, Ankur Deria, Danfeng Hong, Behnood Rasti, Antonio Plaza, and Jocelyn Chanussot. IEEE Transactions on Geoscience and Remote Sensing, vol. 61, 2023.

- Spectral-Spatial Morphological Attention Transformer for Hyperspectral Image Classification. Swalpa Kumar Roy, Ankur Deria, Chiranjibi Shah, Juan M. Haut, Qian Du, and Antonio Plaza. IEEE Transactions on Geoscience and Remote Sensing, vol. 61, 2023.

- Deep Hyperspectral Unmixing using Transformer Network. Preetam Ghosh, Swalpa Kumar Roy, Bikram Koirala, Behnood Rasti, and Paul Scheunders. IEEE Transactions on Geoscience and Remote Sensing, vol. 60, 2022.

Dr. Swalpa Kumar Roy

Assistant Professor, AGEMC

West Bengal

Principal Investigator

Sponsored by